INTRODUCING

Lemma

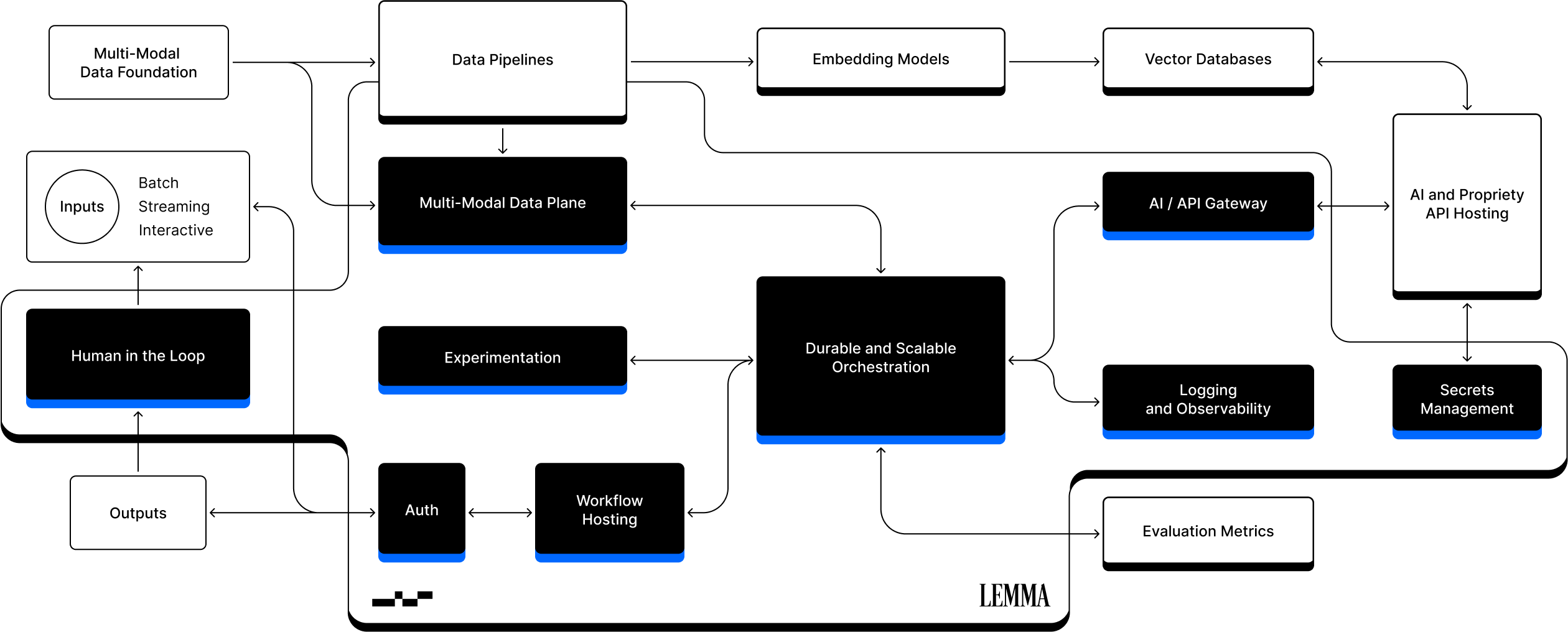

The Composable AI Orchestration platform, designed to bridge the gap between AI's potential and its secure, reliable adoption and implementation.

Meet the Primitives

Using six simple primitives, users can build workflows and agents of arbitrary complexity.

Lemma Worker

A Worker is the fundamental building block for creating automated workflows in the Lemma platform.

It represents an immutable, complete process defined as a sequence of interconnected States that can span across AI models, databases, ETL systems, and applications.

State

A State is the fundamental unit of execution within a Lemma Worker. Each State represents a single, distinct step or operation in the overall automated workflow.

Workers are composed of sequences of interconnected States that define the logic, data transformation, and flow control of the process.
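One way to picture how a Worker composes States is the minimal Python sketch below. It is not Lemma's API: the `State` and `Worker` classes and the `execute` method are hypothetical, each State is assumed to be a plain function from a dict to a dict, and only a linear sequence is modeled (no branching or other flow control). Frozen dataclasses stand in for the Worker's immutability.

```python
from dataclasses import FrozenInstanceError, dataclass
from typing import Any, Callable, Dict, Tuple

Data = Dict[str, Any]


# Hypothetical model: a State wraps a single step that maps input data to output data.
@dataclass(frozen=True)
class State:
    name: str
    step: Callable[[Data], Data]


# Hypothetical model: a Worker is an immutable, ordered tuple of States.
@dataclass(frozen=True)
class Worker:
    name: str
    states: Tuple[State, ...]

    def execute(self, data: Data) -> Data:
        # Each State's output becomes the next State's input (linear flow only).
        for state in self.states:
            data = state.step(data)
        return data


extract = State("extract", lambda d: {"text": d["raw"].strip()})
classify = State("classify", lambda d: {**d, "label": "long" if len(d["text"]) > 10 else "short"})
worker = Worker("triage", (extract, classify))
result = worker.execute({"raw": "  hello  "})

# Frozen dataclasses reject reassignment, mirroring the Worker's immutability.
try:
    worker.name = "renamed"
    mutated = True
except FrozenInstanceError:
    mutated = False
```

Here `result` is `{"text": "hello", "label": "short"}`, and the attempted rename fails because the Worker cannot be modified after it is defined.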

Function

A Function performs a specific action within a State by making an API call. Functions are designed to be modular and easily integrated into different Workers.

The Lemma platform supports multiple protocols for Functions such as REST and gRPC, allowing for flexibility in how you interact with external services.
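To illustrate a Function as a modular API call whose protocol is configurable, here is a hypothetical Python sketch. `Function`, `Protocol`, and the transport mapping are assumptions rather than Lemma's interfaces, and the endpoint URL is a placeholder. The transport is injected so the same Function definition can be reused across Workers; a real REST transport might use `urllib.request`, and a gRPC one would use generated `grpcio` stubs.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Callable, Dict, Mapping

Payload = Dict[str, Any]


class Protocol(Enum):
    REST = "rest"
    GRPC = "grpc"


# A transport performs the actual network call for one protocol.
Transport = Callable[[str, Payload], Payload]


# Hypothetical Function: a named, reusable API call bound to a protocol and endpoint.
@dataclass(frozen=True)
class Function:
    name: str
    protocol: Protocol
    endpoint: str

    def call(self, payload: Payload, transports: Mapping[Protocol, Transport]) -> Payload:
        transport = transports.get(self.protocol)
        if transport is None:
            raise ValueError(f"no transport registered for {self.protocol.value}")
        return transport(self.endpoint, payload)


# Stand-in transport for illustration; it echoes instead of making a network call.
def fake_rest(endpoint: str, payload: Payload) -> Payload:
    return {"endpoint": endpoint, "echo": payload}


summarize = Function("summarize", Protocol.REST, "https://models.example.com/v1/summarize")
out = summarize.call({"text": "hi"}, {Protocol.REST: fake_rest})
```

Keeping the protocol as data on the Function, rather than baked into its code, is what lets one definition be moved between REST and gRPC backends in this sketch.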

Connection

A Connection ingests data from a data source, such as a database or an API, and triggers a Run of a Worker using the incoming data.

Each Connection can be configured to pull specific data from a data source at a preconfigured frequency or to receive it as a stream. The data being processed can be structured, semi-structured, or unstructured.
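A pull-based Connection running at a fixed frequency can be sketched as a polling loop. Everything here is hypothetical: `poll_connection`, the injected `fetch` and `trigger_run` callables, and the choice to trigger one Run per record (a real Connection might batch records instead). A streaming Connection would replace the loop with a subscription to the source.

```python
import time
from typing import Any, Callable, Dict, Iterable, List

Record = Dict[str, Any]


def poll_connection(
    fetch: Callable[[], Iterable[Record]],
    trigger_run: Callable[[Record], str],
    interval_s: float,
    max_polls: int,
) -> List[str]:
    """Hypothetical pull-based Connection: fetch new records every `interval_s`
    seconds and trigger one Run per record. Returns the IDs of the triggered Runs."""
    run_ids: List[str] = []
    for i in range(max_polls):
        for record in fetch():
            run_ids.append(trigger_run(record))
        if i < max_polls - 1:  # no need to wait after the final poll
            time.sleep(interval_s)
    return run_ids


# Simulated source: three polls return one record, nothing, then two records.
batches = iter([[{"id": 1}], [], [{"id": 2}, {"id": 3}]])
run_ids = poll_connection(lambda: next(batches), lambda r: f"run-{r['id']}", 0.0, 3)
```

With the simulated source, `run_ids` is `["run-1", "run-2", "run-3"]`; the empty poll triggers nothing.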

Run

A Run is the fundamental execution unit of a Worker.

A Worker Run represents a single, self-contained execution of a specific Worker, processing a defined set of input data and producing output data or side effects.
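A Run can be modeled as a record that captures one execution's input, output, and outcome. The `Run` class, its status values, and `execute_run` below are hypothetical names chosen for illustration; the Worker is reduced to a list of step functions.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional

Data = Dict[str, Any]


# Hypothetical Run record: one self-contained execution of a Worker.
@dataclass
class Run:
    worker_name: str
    input: Data
    run_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    status: str = "pending"
    output: Optional[Data] = None
    error: Optional[str] = None


def execute_run(worker_name: str, steps: List[Callable[[Data], Data]], data: Data) -> Run:
    run = Run(worker_name=worker_name, input=dict(data))  # keep a copy of the input
    run.status = "running"
    try:
        for step in steps:
            data = step(data)
        run.output = data
        run.status = "succeeded"
    except Exception as exc:  # record the failure instead of propagating it
        run.error = repr(exc)
        run.status = "failed"
    return run


ok = execute_run("doubler", [lambda d: {"x": d["x"] * 2}], {"x": 21})
bad = execute_run("divider", [lambda d: {"x": d["x"] / 0}], {"x": 1})
```

`ok` ends with status `"succeeded"` and output `{"x": 42}`, while `bad` ends with status `"failed"` and the `ZeroDivisionError` recorded in `error`; each Run gets its own ID.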

Context

The Context is the interface through which the data for a Run (a single execution of a Worker) can be accessed.

The Context is represented as a JSON structure and can be queried using standard jq expressions.
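As an illustration, assume a Run's Context looks like the JSON below (the field names are hypothetical). With the real jq CLI, `jq '.states.classify.label' context.json` would select the label. To stay dependency-free, the Python sketch evaluates only a tiny subset of jq path syntax (`.key` and `[index]`); it is not jq and omits pipes, filters, and functions.

```python
import json
import re
from typing import Any

# One path segment: `.key` or `[index]`.
_SEGMENT = re.compile(r"\.([A-Za-z_][A-Za-z0-9_]*)|\[(\d+)\]")


def query(context: Any, path: str) -> Any:
    """Evaluate a tiny subset of jq path syntax such as '.states.classify.label'
    or '.items[0]'. Real jq also supports pipes, filters, and functions, and
    yields null for missing keys, whereas this sketch raises KeyError/IndexError."""
    if path == ".":
        return context
    value, pos = context, 0
    while pos < len(path):
        match = _SEGMENT.match(path, pos)
        if match is None:
            raise ValueError(f"unsupported syntax at {path[pos:]!r}")
        key, index = match.group(1), match.group(2)
        value = value[key] if key is not None else value[int(index)]
        pos = match.end()
    return value


# Hypothetical Context for a Run; the field names are illustrative only.
context = json.loads("""
{
  "run_id": "r-1",
  "input": {"ticket": "Refund request"},
  "states": {"classify": {"label": "billing"}},
  "items": [10, 20]
}
""")
label = query(context, ".states.classify.label")
second = query(context, ".items[1]")
```

Here `label` is `"billing"` and `second` is `20`.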

Lemma’s Distinctive Features

Unlike single-purpose vertical AI applications, Lemma can integrate combinations of models, services, infrastructure, and applications into one cohesive system to run complex workflows securely and at scale.

Human-in-the-loop Guardrails

Integrate human judgment and guardrails into automated workflows with clear interfaces, enabling real-time oversight and corrections.
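A human-in-the-loop guardrail can be thought of as a step that holds a model's output until a person rejects, approves, or corrects it. The sketch below is hypothetical (`Review` and `human_gate` are not Lemma names), and the reviewer is a synchronous callback for simplicity; a production system would more likely wait on a review queue or UI.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional

Data = Dict[str, Any]


@dataclass
class Review:
    approved: bool
    correction: Optional[Data] = None  # reviewer-supplied replacement output


def human_gate(proposal: Data, reviewer: Callable[[Data], Review]) -> Data:
    """Hypothetical guardrail step: pause on a model's proposed output and let a
    human reject it, approve it as-is, or approve it with a correction."""
    review = reviewer(proposal)
    if not review.approved:
        raise RuntimeError("output rejected by reviewer")
    return review.correction if review.correction is not None else proposal


proposal = {"ticket": "Refund request", "label": "shipping"}
corrected = human_gate(proposal, lambda p: Review(True, {**p, "label": "billing"}))
accepted = human_gate(proposal, lambda p: Review(True))
```

The corrected output carries the reviewer's label, the plain approval passes the proposal through unchanged, and a rejection stops the workflow with an error.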

Dynamic Workflow Planning and Execution

Support non-deterministic, multi-step “Agent” execution by securely and dynamically generating a workflow path at runtime.
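One common way to make a runtime-generated path safe is to let a planner choose the order of steps while restricting it to an allowlist of pre-registered States and a step budget. This is a generic pattern, not a description of how Lemma implements planning; `run_planned`, the registry, and the hard-coded plan are hypothetical.

```python
from typing import Any, Callable, Dict, List

Data = Dict[str, Any]
Step = Callable[[Data], Data]


def run_planned(plan: List[str], registry: Dict[str, Step], data: Data, max_steps: int = 10) -> Data:
    """Execute a path chosen at runtime (for example, by a model acting as a
    planner) only through pre-registered States and within a step budget.
    The whole plan is validated before any step runs."""
    if len(plan) > max_steps:
        raise ValueError(f"plan has {len(plan)} steps; budget is {max_steps}")
    unknown = [name for name in plan if name not in registry]
    if unknown:
        raise ValueError(f"plan references unregistered states: {unknown}")
    for name in plan:
        data = registry[name](data)
    return data


registry: Dict[str, Step] = {
    "lookup_order": lambda d: {**d, "order_status": "delivered"},
    "draft_reply": lambda d: {**d, "reply": f"Your order is {d['order_status']}."},
}
# In practice the plan would come from a model; it is hard-coded here.
reply = run_planned(["lookup_order", "draft_reply"], registry, {"ticket": "Where is my order?"})
```

Validating the entire plan up front means a plan that names an unregistered step fails before any side effect occurs.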

Powerful, Flexible AI API Gateway

Streamline integration across REST and gRPC APIs with differing authentication policies, and set up advanced security protections and flexible constraints.
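Handling different authentication policies in a gateway often comes down to translating a per-service policy into request credentials, so workflow code never deals with each upstream's scheme. The policy format and `auth_headers` function below are hypothetical; the header formats themselves follow standard HTTP Bearer and Basic authentication.

```python
import base64
from typing import Dict

Headers = Dict[str, str]


def auth_headers(policy: Dict[str, str]) -> Headers:
    """Translate a hypothetical per-service auth policy into HTTP request headers,
    so callers do not need to know how each upstream API authenticates."""
    kind = policy["type"]
    if kind == "bearer":
        return {"Authorization": f"Bearer {policy['token']}"}
    if kind == "api_key":
        return {policy.get("header", "X-API-Key"): policy["key"]}
    if kind == "basic":
        raw = f"{policy['username']}:{policy['password']}".encode("utf-8")
        return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}
    raise ValueError(f"unsupported auth policy: {kind}")


# Hypothetical policies for two upstream services.
model_api = auth_headers({"type": "bearer", "token": "example-token"})
legacy_api = auth_headers({"type": "basic", "username": "user", "password": "pass"})
```

`legacy_api` comes out as `{"Authorization": "Basic dXNlcjpwYXNz"}`, the Base64 encoding of `user:pass`.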

Experiment and Fallback with Resiliency

Easily experiment, run models in parallel, and configure fallback models to ensure uninterrupted workflow execution.
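The two behaviors described here, trying fallback models in order and running models side by side, can be sketched generically in Python. The model callables are stand-ins rather than real model clients, and `call_with_fallback` and `call_in_parallel` are hypothetical helpers, not Lemma functions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Sequence, Tuple

Model = Callable[[str], str]


def call_with_fallback(prompt: str, models: Sequence[Tuple[str, Model]]) -> Tuple[str, str]:
    """Try each (name, model) in priority order and return the first success."""
    errors: List[str] = []
    for name, model in models:
        try:
            return name, model(prompt)
        except Exception as exc:  # any failure moves on to the next model
            errors.append(f"{name}: {exc!r}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


def call_in_parallel(prompt: str, models: Sequence[Tuple[str, Model]]) -> Dict[str, str]:
    """Run several models concurrently, e.g. to compare their outputs side by side."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(model, prompt) for name, model in models}
        return {name: future.result() for name, future in futures.items()}


def primary(prompt: str) -> str:  # stand-in for a model that is timing out
    raise TimeoutError("primary model timed out")


def backup(prompt: str) -> str:  # stand-in for a healthy fallback model
    return prompt.upper()


chosen = call_with_fallback("hello", [("primary", primary), ("backup", backup)])
compared = call_in_parallel("hello", [("upper", str.upper), ("title", str.title)])
```

Because the primary stand-in fails, `chosen` is `("backup", "HELLO")`; `compared` is `{"upper": "HELLO", "title": "Hello"}`.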

Observable, Secure Non-Human Identity Management

Monitor and govern the Non-Human Identities (NHIs) powering any workflow built with Lemma in a secure NHI management layer.

Agnostic to Model Service or Cloud Provider

Avoid vendor lock-in and ensure system adaptability with the ability to run and orchestrate models and services across different topologies and networks.

ETL Support for All Data Types

Seamlessly connect workflows to, and operate on, tabular, non-tabular, and unstructured data.

Streaming, Batch, and Interactive Execution

Adeptly handle workflows in one or more execution modes, ensuring versatile and efficient processing for all types of tasks.

PROBLEM

Why is it hard to connect AI across your stack?

AI Models and Data Come in Different Shapes and Sizes

There are no unified standards for data models across AI services. By design, classical and generative AI models are built to operate on all kinds of data, structured and unstructured alike. As a result, AI services require a data translation layer.

AI Should Be Trustworthy, But Often Needs Human Augmentation

Advancements in AI have opened up endless possibilities for innovation, but AI workflows are iterative and can require guidance and direction. Proper human oversight and guardrails must exist at critical stages to incorporate contextual understanding and subjective evaluation.

Most Enterprises Use Hundreds of Software Products, Some Legacy, Some Cutting Edge

Purpose-built infrastructure is needed to connect and orchestrate AI across different products, platforms, and cloud providers, and to handle differing error codes, authentication policies, and API protocols.

SOLUTION

Lemma Platform is the New AI-Enablement Layer