Powered by Lemma Series

Ruben Cepeda - Software Engineer at Thread AI

January 21, 2025

As part of our vision to democratize mission-critical AI enablement software, one of our key tenets is to reduce friction for customers on their AI journey. That friction can be operational, organizational, or technical, but it also appears at the procurement stage, often as a deployability or regulatory problem.

Today we are excited to publicly share that our platform, Lemma, is now available on Google Cloud Marketplace. Google Cloud users can now rapidly design, deploy, and manage sophisticated, AI-driven workflows that integrate with Google Cloud services like BigQuery, the Vertex AI platform, Gemini models, Workspace, and Cloud SQL. This investment was a direct response to some of our prospective customers’ desire to reduce friction at the procurement stage. We have made it easier than ever for our customers to go from zero to value-generating Lemma Workers, especially for those whose core operational data lives across the various Google Cloud products. Customers can now start safely operationalizing AI within minutes while benefiting from the enhanced security of keeping all traffic within Google Cloud.

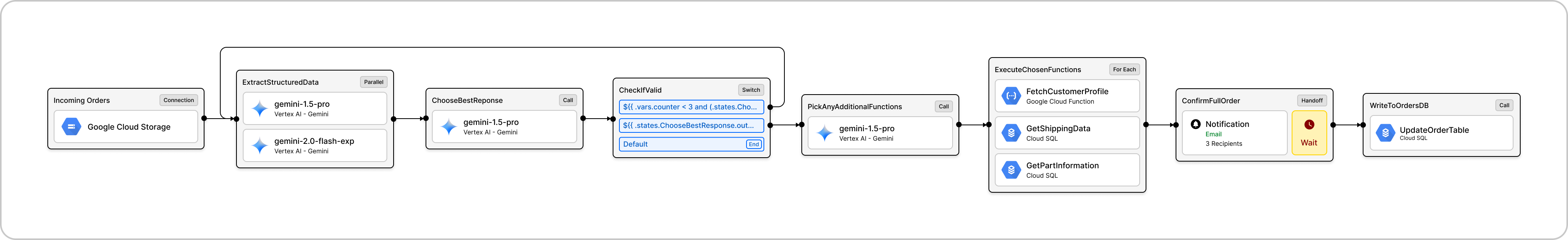

For Thread, deploying onto Google Cloud Marketplace is a major milestone in our growing multi-cloud offering. Users can now scale their workflows as they run on Google Cloud’s trusted global infrastructure. Below is a common Agent-style workflow built in the platform. It leverages some of the most powerful tools available within the Google Cloud ecosystem and is natively built to scale on top of Google Kubernetes Engine (GKE).

The goal of this Worker is to extract accurate, structured information from incoming unstructured orders for equipment parts. After orders land in object storage (GCS), a Connector pulls the data into the Context of the Worker. Two versions of Vertex AI-powered Agents then run in parallel, each extracting data that conforms to a predefined JSON schema from the incoming order content. A downstream Agent acts as a judge and selects the best response. Additional checks then decide among three outcomes: the data is good to move on, the Agents should try again, or the data is bad and the Worker should end. If the Worker continues into the next State, a final Agent inspects the extracted JSON data and determines whether one, two, three, or no additional Functions need to be called to fetch the information required to complete the full Order. Depending on the choices of the Agent, and after any additional Functions are called, the Worker pauses in an optional Handoff State. A Handoff State is a special human-in-the-loop step in a Worker (Handoffs will be the focus of an upcoming engineering blog). Once an operator has approved the final contents, with any potential edits, the finalized order is written into the official system of record in Cloud SQL.
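To make the control flow above concrete, here is a minimal, illustrative sketch in plain Python. It is not Lemma's API: every name in it (`run_worker`, `judge`, `check`, `REQUIRED_FIELDS`, the toy extractors and lookup) is a hypothetical stand-in, the Agents are replaced by ordinary functions, and the GCS Connector and Cloud SQL write are left out. It only shows the shape of the logic: parallel extraction, a judge, a three-way check, optional follow-up calls, and a human approval step.

```python
from concurrent.futures import ThreadPoolExecutor
from enum import Enum
from typing import Callable, Optional

# Hypothetical schema: fields every extracted order must contain.
REQUIRED_FIELDS = ("part_number", "quantity")


class Verdict(Enum):
    CONTINUE = "continue"  # data is good to move on
    RETRY = "retry"        # the extractors should try again
    END = "end"            # data is bad, stop the Worker


def filled(order: dict) -> int:
    """Count how many required fields are present."""
    return sum(order.get(field) is not None for field in REQUIRED_FIELDS)


def judge(candidates: list[dict]) -> dict:
    """Stand-in for the judge Agent: pick the most complete candidate."""
    return max(candidates, key=filled)


def check(order: dict, attempt: int, max_attempts: int) -> Verdict:
    """Three-way check: continue, retry, or end."""
    if filled(order) == len(REQUIRED_FIELDS):
        return Verdict.CONTINUE
    return Verdict.RETRY if attempt < max_attempts else Verdict.END


def run_worker(
    raw_order: str,
    extractors: list[Callable[[str], dict]],
    lookups: dict[str, Callable[[dict], object]],
    approve: Callable[[dict], Optional[dict]],
    max_attempts: int = 2,
) -> Optional[dict]:
    order = None
    for attempt in range(1, max_attempts + 1):
        # Run every extractor on the same input in parallel.
        with ThreadPoolExecutor(max_workers=len(extractors)) as pool:
            candidates = list(pool.map(lambda extract: extract(raw_order), extractors))
        best = judge(candidates)
        verdict = check(best, attempt, max_attempts)
        if verdict is Verdict.CONTINUE:
            order = dict(best)
            break
        if verdict is Verdict.END:
            return None
    if order is None:
        return None
    # Stand-in for the final Agent: call only the lookups whose field is missing,
    # which may be none of them.
    for field, lookup in lookups.items():
        if order.get(field) is None:
            order[field] = lookup(order)
    # Stand-in for the Handoff State: an operator approves (and may edit) the order.
    return approve(order)


# Toy extractors standing in for the two Agent versions.
def parse_key_values(text: str) -> dict:
    pairs = dict(token.split("=", 1) for token in text.split() if "=" in token)
    qty = pairs.get("qty")
    return {"part_number": pairs.get("part"), "quantity": int(qty) if qty else None}


def always_empty(text: str) -> dict:
    return {"part_number": None, "quantity": None}


result = run_worker(
    "part=AB-12 qty=3",
    extractors=[always_empty, parse_key_values],
    lookups={"unit_price": lambda order: 9.5},  # hypothetical enrichment Function
    approve=lambda order: order,                # operator approves without edits
)
print(result)
```

In this sketch the judge simply prefers the candidate with the most required fields filled. In the Worker described above that decision is made by an Agent, so treat the heuristic as a placeholder.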

A tenet of our infrastructure philosophy is the ease of composability.

Composability in Lemma is woven not only throughout our product, where every workflow represented as a Worker is made up of discrete, modular States, but also throughout our deployability vision. Being able to repeatably and natively package all of the infrastructure, or individual components of it, so that we can meet customers wherever their infrastructure permits, at scale, is crucial in the evolving BYOC (Bring Your Own Cloud) landscape.

This effort reinforced how intentionally each component of our system (e.g. API Gateways, Authentication, Data Plane) was designed to allow for this deployment versatility. Every part of our software is composable, which gives us great flexibility in deployment modality across the various Kubernetes ecosystems.

As we continue to expand our on-prem and air-gapped offerings, the next step of this effort is to offer Google Cloud Marketplace customers with more stringent network requirements the option to deploy onto their own private GKE!